The concept of Artificial General Intelligence (AGI) has been a topic of discussion for the past decade, with entrepreneurs, investors, and researchers debating its potential impact on humanity. While some have expressed concerns that AGI could pose an existential threat, perpetuate biases, and erode human values, others remain optimistic about its possibilities.

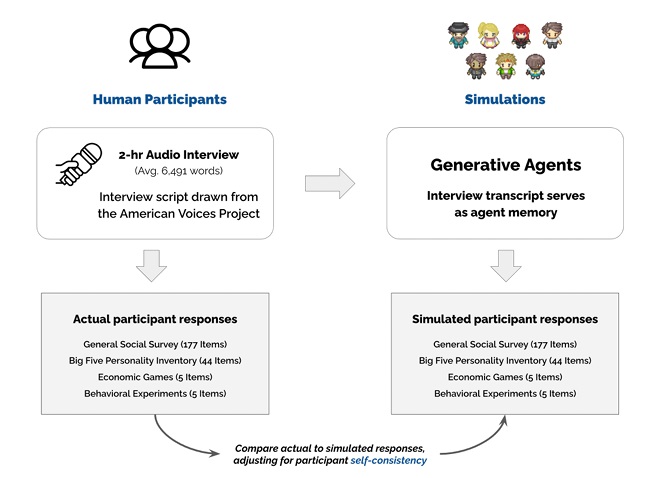

A recent paper by researchers from Google DeepMind and universities including Stanford, Northwestern, and the University of Washington proposes a generative agent simulation architecture. The approach aims to create AI-powered clones, or “generative agents,” that replicate the attitudes and behaviors of specific individuals, in effect answering and deciding the way those individuals would.

These AI clones have many potential applications, including helping researchers test hypotheses and predict human responses to new laws, social changes, or other scenarios. The paper reports results from a demonstration involving 1,000 real individuals.

This research is a notable development in the ongoing debate between two schools of thought: those who worry that AGI poses an existential threat to humanity, and those who believe it has the potential to vastly improve human lives.

Let’s take a closer look at the research.

How did they do it?

Interviews: They talked to 1,000 real people in the U.S. for two hours each, asking about their lives, beliefs, and experiences.

Surveys and Games: Those people also answered standard surveys (like the “General Social Survey,” which asks about society, politics, and personal views) and played simple games that test decision-making (like sharing money).

AI Training: They fed each person’s interview transcript into a large language model (the kind of AI behind ChatGPT) to create a “digital clone” of that person.

Testing: They checked if the AI clones answered surveys and played games the same way the real people did.
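To make the last two steps more concrete, here is a minimal sketch of how an interview transcript might be combined with a survey question before being sent to a language model. Everything in it is an assumption for illustration: the `SurveyQuestion` type, the `build_agent_prompt` and `parse_choice` helpers, and the prompt wording are hypothetical and are not the paper's actual prompts or code. No model is called here; the sketch only prepares the input and interprets a reply.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SurveyQuestion:
    """A multiple-choice survey item (hypothetical structure)."""
    text: str
    options: List[str]


def build_agent_prompt(transcript: str, question: SurveyQuestion) -> str:
    """Assemble a prompt asking a model to answer as the interviewee would.

    The wording is an illustrative assumption, not the paper's prompt.
    """
    numbered = "\n".join(
        f"{i}. {option}" for i, option in enumerate(question.options, start=1)
    )
    return (
        "Below is an interview with a study participant.\n\n"
        f"{transcript.strip()}\n\n"
        "Answer the following question the way this participant would.\n"
        f"Question: {question.text}\n"
        f"Options:\n{numbered}\n"
        "Reply with the number of one option."
    )


def parse_choice(reply: str, question: SurveyQuestion) -> Optional[str]:
    """Map a reply such as '2' back to the option text, or None if invalid."""
    try:
        index = int(reply.strip())
    except ValueError:
        return None
    if 1 <= index <= len(question.options):
        return question.options[index - 1]
    return None
```

The clone's parsed answer can then be compared with what the real person chose for the same question.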

What did they find?

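The AI clones matched people’s survey answers 85% as accurately as the people matched their own answers when they retook the survey two weeks later. In other words, the score is measured against each person’s own consistency: people do not always give the same answer twice, so a clone is judged relative to how well a person can replicate themselves.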
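The idea of scoring a clone against a person's own consistency can be sketched in a few lines. This is an illustrative simplification, assuming simple exact-match agreement on categorical answers; the function names and the toy answers are made up, and the paper's exact metric may differ in detail.

```python
from typing import Sequence


def agreement(a: Sequence[str], b: Sequence[str]) -> float:
    """Fraction of questions on which two answer lists match exactly."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("answer lists must be non-empty and the same length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def normalized_accuracy(
    agent: Sequence[str], first: Sequence[str], retest: Sequence[str]
) -> float:
    """Agent-vs-person agreement divided by the person's own test-retest agreement."""
    self_consistency = agreement(first, retest)
    if self_consistency == 0:
        raise ValueError("person never repeated an answer; ratio is undefined")
    return agreement(agent, first) / self_consistency


# Toy data (made up): ten questions answered "a" or "b".
first_answers = ["a"] * 10                 # person's original answers
retest_answers = ["a"] * 8 + ["b"] * 2     # person two weeks later: 8 of 10 match
agent_answers = ["a"] * 7 + ["b"] * 3      # clone: 7 of 10 match the original

score = normalized_accuracy(agent_answers, first_answers, retest_answers)
```

In this toy case the clone agrees with the person on 70% of questions and the person agrees with themselves on 80%, so the normalized score is 0.7 / 0.8, or about 0.875.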

They worked better than AI models that only used basic info (like age, race, or political party) to guess behavior, which made the predictions less reliant on stereotypes.

Even if parts of the interviews were removed, the AI clones still did a good job, showing that interviews capture rich details about people.
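One way to picture this kind of robustness check is to randomly drop a share of the interview before building the clone and then re-run the same tests. The sketch below is an assumption about how such a check could be set up, not the authors' procedure; `drop_turns`, its parameters, and the sample turns are hypothetical.

```python
import random
from typing import List


def drop_turns(turns: List[str], fraction: float, seed: int = 0) -> List[str]:
    """Return the interview with roughly `fraction` of its turns removed at random.

    The original order of the remaining turns is preserved.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the same subset can be reproduced
    keep_count = len(turns) - round(len(turns) * fraction)
    kept_indices = sorted(rng.sample(range(len(turns)), keep_count))
    return [turns[i] for i in kept_indices]


# Made-up interview turns for illustration.
interview = [f"Turn {i}: ..." for i in range(1, 11)]
shortened = drop_turns(interview, fraction=0.4)
```

The shortened transcript would then be used in place of the full one, and the clone's accuracy compared with the full-interview version.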

Why does this matter?

Social Science: Instead of interviewing thousands of people every time, researchers could use these AI clones to simulate how groups might react to policies, disasters, or new products.

Fairness: By focusing on real people’s stories (not just demographics like age, gender, race, region, education, and political ideology), the AI is less likely to oversimplify people into stereotypes.

Privacy: The data is shared carefully—basic results are public, but personal details are protected.

Example

- Imagine predicting how Americans would react to a new healthcare law. Instead of polling everyone, you could ask these AI clones (trained on real interviews) to simulate the response.

- In the same way, a large company could survey its employees about work-from-home policies and then use their clones to anticipate how they would respond to policy changes.

But there are limitations to this.

- The AI clones aren’t perfect—they’re still based on what people say, not what they do in real life.

- Interviews take time, and they do nothing to remove hidden biases in the AI itself.

- AI clone data becomes extremely sensitive. Compromising this data could be catastrophic.

Conclusion

Creating AI that can act like real people is a big step forward in how we understand both human behavior and what AI can do. This new technology gives us amazing new ways to study society and plan better policies. But we need to be careful about how we use it.

Being able to make AI copies of people that think and act like them is both exciting and worrying. It helps us better predict how people might react to changes and make smarter choices. But we also need to think hard about keeping people’s information private, making sure they agree to have their data used, and figuring out where to draw the line between real people and their AI copies.
