Building on my previous article, “Is It the GPU Era or the Rise of GPU-Powered AI?”, let’s explore GPU-as-a-Service (GPUaaS) as a revenue-generating offering that telecom operators (telcos) can sell to enterprises and other adopting organizations. Drawing on their experience running multivendor infrastructure, telcos are positioned to deliver these services in collaboration with multiple vendors.

Amid the ongoing AI boom, GPUaaS represents a tremendous growth opportunity for telcos. To illustrate, here are insights from a couple of prominent research reports:

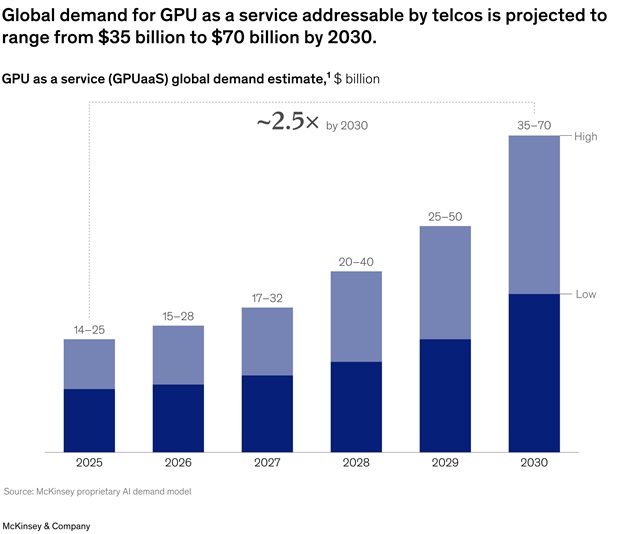

- McKinsey & Company projects that the global GPUaaS demand addressable by telcos will range from $35 billion to $60 billion by 2030.

- ABI Research forecasts that mobile operators will generate over $21 billion in GPUaaS revenue by 2030 as they develop their AI factories. Furthermore, ABI Research identifies 2027 as the pivotal year when GPUaaS revenue for telecom operators will accelerate sharply, driven by maturing infrastructure buildouts and more advanced distributed GPU computing options.

Taken together, these forecasts position GPUaaS as an emerging revenue line for the telecom sector.

The core focus of this discussion is the essential components—or “ingredients”—required for telcos to successfully offer GPUaaS. An insightful article from TelecomTV sheds light on this by examining the collaborations and initiatives of SK Telecom (SKT).

Here’s a breakdown of the key elements:

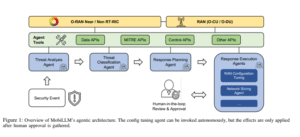

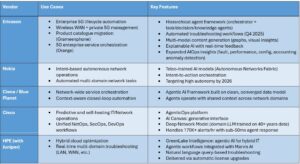

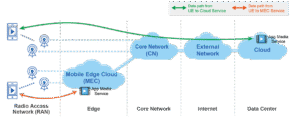

At its core, GPUaaS is more than just powerful GPUs: it is a combination of computing power, integration tools, virtualization software, orchestration systems, and storage options. This setup lets telcos like SK Telecom (SKT) offer flexible, scalable GPUaaS for AI workloads, and it shows how telcos are building large-scale AI infrastructure by combining hardware with software.

- Foundation: Hardware Layer – This starts with top-tier GPUs and servers built for AI tasks, backed by data center experts who handle integration, reliability, and growth to support heavy AI needs.

- Middle Layer: Virtualization and Cloud OS – These tools allow flexible splitting of GPU groups and smart resource sharing, helping telcos deliver GPUaaS efficiently.

- Management Layer: Orchestration and AIOps – These automate the full process, from setup and monitoring to optimizing AI jobs, making everything run smoother.

- Top Layer: Storage and AI OS – Advanced storage and AI-focused systems provide speed, security, and separate spaces for quick, safe, and isolated AI work.
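To make the layering a bit more concrete, here is a minimal Python sketch of the stack as data, plus a toy version of the "flexible splitting of GPU groups" idea from the middle layer. Everything in it is an illustrative assumption: `GpuPool`, `STACK_LAYERS`, the tenant names, and the pool size are hypothetical and do not describe SKT's actual platform or any vendor's API.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The four layers from the list above, bottom to top.
# Keys and descriptions are paraphrased labels, not product names.
STACK_LAYERS = [
    ("hardware", "GPUs and AI servers"),
    ("virtualization", "GPU partitioning and cloud OS"),
    ("management", "orchestration and AIOps"),
    ("storage_ai_os", "storage and AI OS"),
]


@dataclass
class GpuPool:
    """Hypothetical model of a GPU pool that is split among tenants."""

    total_gpus: int
    allocations: dict[str, int] = field(default_factory=dict)

    @property
    def free_gpus(self) -> int:
        # GPUs not currently reserved by any tenant.
        return self.total_gpus - sum(self.allocations.values())

    def allocate(self, tenant: str, gpus: int) -> bool:
        """Reserve GPUs for a tenant; refuse requests the pool cannot cover."""
        if gpus <= 0 or gpus > self.free_gpus:
            return False
        self.allocations[tenant] = self.allocations.get(tenant, 0) + gpus
        return True

    def release(self, tenant: str) -> int:
        """Return all of a tenant's GPUs to the pool; report how many were freed."""
        return self.allocations.pop(tenant, 0)


if __name__ == "__main__":
    pool = GpuPool(total_gpus=8)  # assumed pool size, for illustration only
    print(pool.allocate("tenant-a", 4))
    print(pool.allocate("tenant-b", 6))  # exceeds the remaining capacity
    print(pool.free_gpus)
```

In a real deployment, the virtualization and orchestration layers would make these decisions using actual scheduling, isolation, and billing logic. The sketch only illustrates the bookkeeping idea of carving one shared pool into per-tenant slices.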

What do you think—does this tech stack miss any key pieces? Is there an extra layer telcos need to make their GPUaaS offerings truly complete?

In my next article, I’ll take a broader view and share insights from other telcos to build a more complete picture. Stay tuned!
